It is therefore questionable whether these approaches still meet all of today's challenges and requirements. This consideration gave rise to the Data Vault modeling approach.

Challenges of classic Data Warehouses

In the Data Warehouse environment, two well-known modeling approaches, one by Kimball and one by Inmon, have been used for many years to store data. However, they face a growing number of challenges:

- New requirements

- Larger amounts of data

- Growing IT costs

What is Data Vault?

Data Vault is a modeling technique that is particularly suitable for agile Data Warehouses. It offers high flexibility for extensions and complete historization of the data, and it allows data loading processes to run in parallel.
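To make historization and parallel loading more concrete, here is a minimal Python sketch, not a reference implementation. It assumes that keys are MD5 hashes of the normalized business key (a common Data Vault convention, but an assumption here) and uses in-memory Python structures in place of database tables; the names `hash_key`, `hash_diff`, `load_hub` and `load_satellite` and the sample customer data are hypothetical. Because each key depends only on the business key, hub and satellite loads need no shared sequence and can run side by side, while the satellite keeps history by appending a new row only when an attribute changes.

```python
import hashlib
from concurrent.futures import ThreadPoolExecutor
from datetime import datetime, timezone


def hash_key(*business_keys: str) -> str:
    # Assumed convention: MD5 over the normalized business key(s). Every loader
    # can compute this on its own, so no central sequence lookup is needed.
    normalized = "||".join(k.strip().upper() for k in business_keys)
    return hashlib.md5(normalized.encode("utf-8")).hexdigest()


def hash_diff(attributes: dict) -> str:
    # Fingerprint of all descriptive attributes; any change yields a new value.
    payload = "||".join(f"{name}={attributes[name]}" for name in sorted(attributes))
    return hashlib.md5(payload.encode("utf-8")).hexdigest()


def load_hub(hub: dict, batch: list) -> None:
    # Hub: one entry per business key, added once and never changed.
    for business_key, _ in batch:
        hub.setdefault(hash_key(business_key), business_key)


def load_satellite(sat: list, batch: list, load_date: datetime) -> None:
    # Satellite: insert-only history. A row is appended only when the attributes
    # differ from the most recent row stored for the same hash key.
    latest = {}
    for row in sat:  # rows are in load order, so the last one per key wins
        latest[row["hash_key"]] = row["hash_diff"]
    for business_key, attributes in batch:
        hk, hd = hash_key(business_key), hash_diff(attributes)
        if latest.get(hk) != hd:
            sat.append({"hash_key": hk, "load_date": load_date, "hash_diff": hd, **attributes})
            latest[hk] = hd


# Hypothetical sample data: two delivery batches from a source system.
hub, sat = {}, []
batches = [
    [("C-001", {"name": "Alice", "city": "Berlin"}), ("C-002", {"name": "Bob", "city": "Hamburg"})],
    [("C-001", {"name": "Alice", "city": "Munich"}), ("C-002", {"name": "Bob", "city": "Hamburg"})],
]
for batch in batches:
    load_date = datetime.now(timezone.utc)
    # Hub and satellite share no state and depend only on the hash key,
    # so both loads can run at the same time.
    with ThreadPoolExecutor(max_workers=2) as pool:
        futures = [pool.submit(load_hub, hub, batch), pool.submit(load_satellite, sat, batch, load_date)]
        for future in futures:
            future.result()  # re-raise any exception from a worker

print(len(hub), len(sat))  # → 2 3
```

After the second batch, only Alice's change of city produces a new satellite row; Bob's unchanged record is skipped, and the earlier Berlin row remains as history.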

This hybrid approach combines the advantages of the third normal form with those of the star schema. Today in particular, companies need to transform their businesses in ever shorter cycles and reflect these transformations in the Data Warehouse. Data Vault supports exactly these requirements without significantly increasing the complexity of the Data Warehouse over time. Unlike the Kimball and Inmon approaches, it thereby avoids the ever-increasing IT costs that come with extensive implementation and testing cycles and a long list of potential dependencies.

Procedure for Data Vault

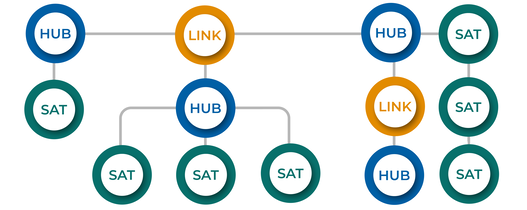

The data integration architecture of the Data Vault approach relies on robust standards and definition methods that bring information from different sources together in a meaningful way. The model consists of three basic table types: hubs, links and satellites. The approach offers the following advantages:

- Massive reduction in development time when implementing business requirements

- Earlier return on investment (ROI)

- Scalable Data Warehouse

- Traceability of all data back to the source system

- Near-real-time loading (in addition to classic batch run)

- Big Data Processing (>Terabytes)

- Iterative, agile development cycles with incremental expansion of the DWH

- Few, automatable ETL patterns
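Data Vault models are commonly built from hubs (business keys), links (relationships between business keys) and satellites (descriptive attributes with their history). The following minimal Python sketch shows how one staging row could be split into these three table types; it is an illustration under assumptions, not a prescribed design. The MD5 hash keys, the field names, the `split_order` helper, the `crm_system` record source and the order data are all hypothetical. Each row carries a load date and a record source, which is what makes every record traceable back to its source system, and the split follows the same small pattern for every entity, which is why such loads lend themselves to automation.

```python
import hashlib
from dataclasses import dataclass
from datetime import datetime, timezone


def make_hash_key(*business_keys: str) -> str:
    # Assumed convention: MD5 over the normalized, delimited business key(s).
    normalized = "||".join(k.strip().upper() for k in business_keys)
    return hashlib.md5(normalized.encode("utf-8")).hexdigest()


@dataclass(frozen=True)
class HubRow:
    hash_key: str
    business_key: str
    load_date: datetime
    record_source: str  # lets each row be traced back to its source system


@dataclass(frozen=True)
class LinkRow:
    hash_key: str         # hash of the combined business keys
    hub_hash_keys: tuple  # references to the connected hubs
    load_date: datetime
    record_source: str


@dataclass(frozen=True)
class SatelliteRow:
    parent_hash_key: str  # hub (or link) whose attributes this row describes
    load_date: datetime
    record_source: str
    attributes: tuple     # descriptive attributes that are historized


def split_order(row: dict, source: str, load_date: datetime):
    """Split one hypothetical staging row into hub, link and satellite rows."""
    customer_hk = make_hash_key(row["customer_id"])
    order_hk = make_hash_key(row["order_id"])
    hubs = [
        HubRow(customer_hk, row["customer_id"], load_date, source),
        HubRow(order_hk, row["order_id"], load_date, source),
    ]
    link = LinkRow(
        make_hash_key(row["customer_id"], row["order_id"]),
        (customer_hk, order_hk),
        load_date,
        source,
    )
    sat = SatelliteRow(order_hk, load_date, source, (("amount", row["amount"]), ("status", row["status"])))
    return hubs, link, sat


staging_row = {"customer_id": "C-001", "order_id": "O-4711", "amount": 99.5, "status": "shipped"}
hubs, link, sat = split_order(staging_row, "crm_system", datetime.now(timezone.utc))
print(len(hubs), link.hub_hash_keys == (hubs[0].hash_key, hubs[1].hash_key))  # → 2 True
```

Keeping relationships in a separate link table means a new relationship or a new source can be added as a new link or satellite without altering the existing hubs, which is one way the flexibility for extensions described above can be realized.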

Marc Bastien
