This post describes a student group project developed within the Data Science Lab undergraduate course of the Vienna University of Economics and Business, co-supervised by Trustbit.

Student project team: Michael Fixl, Josef Hinterleitner, Felix Krause and Adrian Seiß

Supervisors: Prof. Dr. Axel Polleres (WU Vienna), Dr. Vadim Savenkov (Trustbit)

Introduction

In the previous blog post, we built an already fairly powerful image segmentation model that detects the shape of truck parking lots in satellite images. In this post, we run the code on new hardware and try to obtain even better and more robust results.

New hardware and out-of-sample data

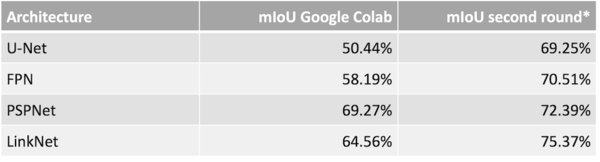

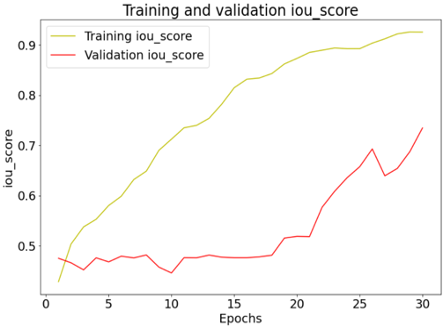

Up to now, we trained all semantic segmentation models on Google Colab. This has the advantage that everyone can easily reproduce the project, but unfortunately the platform partly relies on outdated package versions. To check whether the model training can simply be repeated on different hardware, we retrain the most promising semantic segmentation models with the newest package versions on a high-end computer with an RTX 3090 graphics card. This shows that the results can indeed vary to some degree depending on the hardware and packages used.

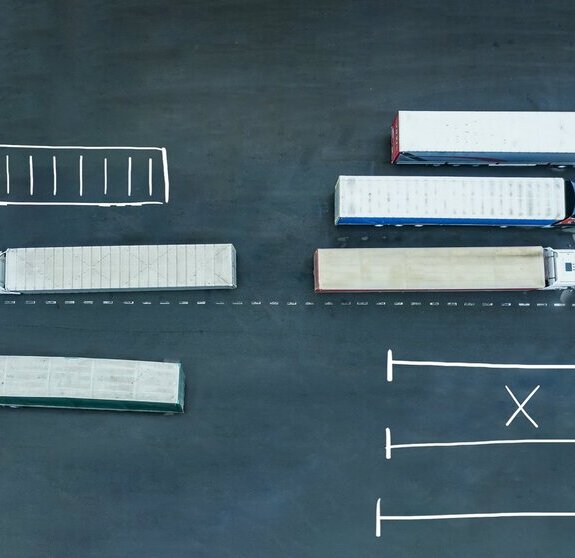

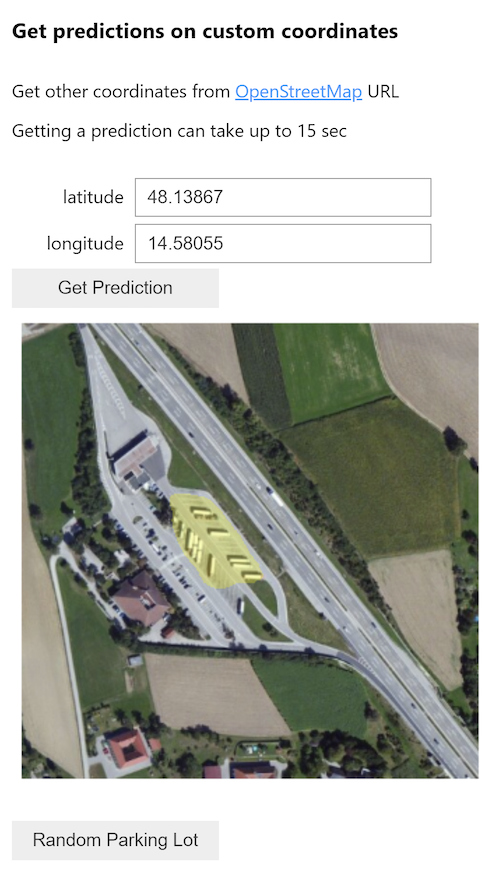

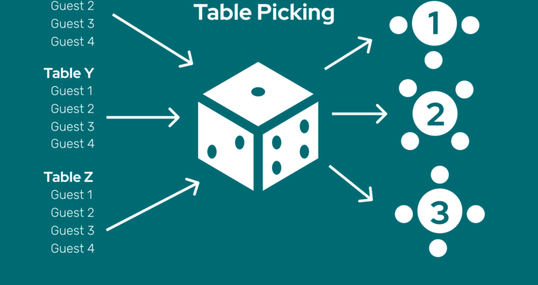
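Part of this run-to-run variation can be reduced by fixing the random seeds before training. Below is a minimal sketch of such a helper; the function name `set_global_seed` is hypothetical, and the PyTorch branch assumes the models are trained with PyTorch, which this post does not state. Even with fixed seeds, results can still differ between GPUs and package versions, because the underlying numerical kernels are not guaranteed to be identical.

```python
import os
import random

import numpy as np


def set_global_seed(seed: int = 42) -> None:
    """Hypothetical helper: fix the seeds of the common random number generators."""
    # Only affects Python subprocesses started after this call,
    # not hash randomization in the already running interpreter.
    os.environ["PYTHONHASHSEED"] = str(seed)
    random.seed(seed)
    np.random.seed(seed)
    try:
        import torch  # assumption: training uses PyTorch
    except ImportError:
        return
    torch.manual_seed(seed)
    # Silently ignored if no CUDA device is available.
    torch.cuda.manual_seed_all(seed)
    # Prefer deterministic cuDNN kernels (slower, and not every op has one).
    torch.backends.cudnn.deterministic = True
    torch.backends.cudnn.benchmark = False
```

Calling `set_global_seed(42)` once at the top of a training script, before the model and data loaders are created, should make repeated runs on the same machine and package versions more comparable.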

Let’s also check how robust our model is against more varied data, e.g. images at different scales or from sources other than OpenStreetMap. To this end, we ran some experiments with previously unseen truck parking lots from Google Earth that vary in position and size. Applying the optimized PSPNet model from the last blog post to those images shows that its generalizability is not yet convincing, as you can see below.
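One way to put a number on such out-of-sample checks is the intersection over union (IoU) between the predicted mask and a ground-truth mask for each new image. The sketch below assumes that a ground-truth mask has been drawn for every Google Earth image and that the model outputs a per-pixel probability map; the function names and the 0.5 threshold are illustrative assumptions, not the project's evaluation code.

```python
import numpy as np


def binary_iou(pred: np.ndarray, target: np.ndarray, threshold: float = 0.5) -> float:
    """IoU of a predicted probability map and a binary ground-truth mask."""
    pred_mask = pred >= threshold          # binarize the model output
    target_mask = target.astype(bool)
    intersection = np.logical_and(pred_mask, target_mask).sum()
    union = np.logical_or(pred_mask, target_mask).sum()
    # Both masks empty: treat as a perfect match.
    if union == 0:
        return 1.0
    return float(intersection / union)


def mean_iou(preds, targets, threshold: float = 0.5) -> float:
    """Average per-image IoU over a set of (hypothetical) out-of-sample images."""
    if len(preds) != len(targets):
        raise ValueError("need exactly one ground-truth mask per prediction")
    scores = [binary_iou(p, t, threshold) for p, t in zip(preds, targets)]
    return float(np.mean(scores))
```

Reporting the mean IoU on the new images next to the score on the original test set makes the gap in generalizability explicit instead of relying on visual inspection alone.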